This post is continuation to Browser automation in Excel using Selenium VBA. Have a look at that post first as it explains how to get started. This post is all about how to scrape website using selenium VBA in Excel and different methods that are used to scrape data and best practices for scraping.

Scrape a blog

Lets get started with a simple example. Lets scrape posts data from this blog codingislove.com. We’ll be scraping post title, URL, Category and Published date.

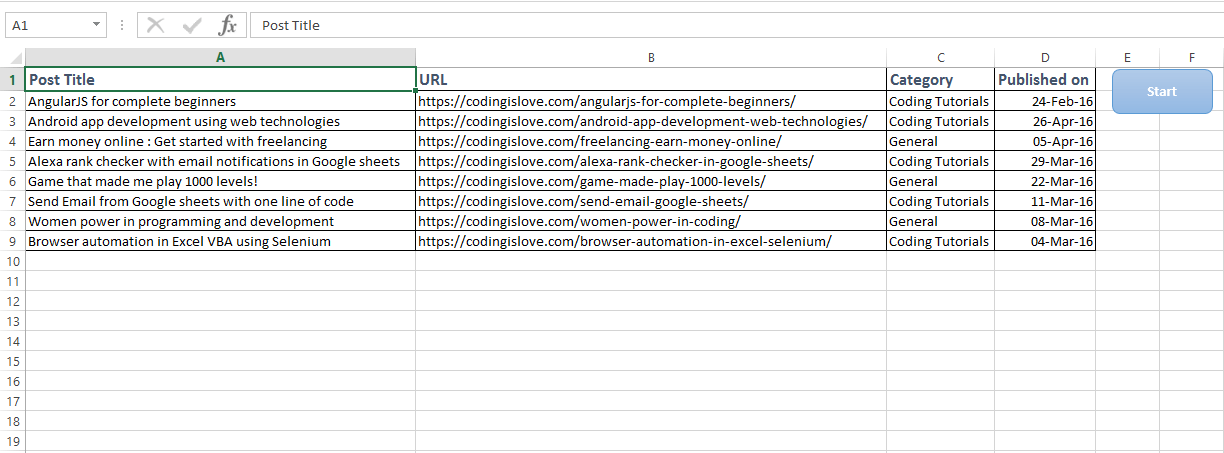

Scraped data looks like this :

Simple goal, Go to codingislove.com and scrape data. Lets have a look at code once.

Public Sub scrapeCIL()

Dim bot As New WebDriver, posts As WebElements, post As WebElement, i As Integer, mysheet As Worksheet, keys As Selenium.keys

bot.Start "chrome", "https://codingislove.com"

bot.Get "/"

Set posts = bot.FindElementsByTag("article")

i = 2

Set mysheet = Sheets("Coding is Love")

bot.Mouse.moveTo bot.FindElementByClass("pagination")

'Selenium can only view visible elements. I have added animations to my homapage which will reveal posts only when scrolled to them. So scroll to bottom of the page first then find elements.

For Each post In posts

mysheet.Cells(i, 1).Value = post.FindElementByClass("entry-title").Text

mysheet.Cells(i, 2).Value = post.FindElementByClass("entry-title").FindElementByTag("a").Attribute("href")

mysheet.Cells(i, 3).Value = post.FindElementByCss(".first-category > a").Text

On Error Resume Next 'Sticky posts don't have published date so ignore the error if not found

mysheet.Cells(i, 4).Value = post.FindElementByClass("published").Text

On Error GoTo 0 'Add back error handling

i = i + 1

Next

bot.Quit

End Sub

Code explanation

- First, we start a web driver, go to codingislove.com homepage.

- Extract all posts to

postselement usingFindElementsByTagmethod as all posts have a HTML tag namedarticlein this blog. We can look up HTML elements of a page using Right click > Inspect element in chrome. I’ve definedpostsas WebElements.FindElementsByTagmethod extracts all elements with specified tag. - mysheet is defined as a worksheet, set it to sheetname to which date needs to be extracted. In this case my sheet name is

Coding is Love - Now loop through each post in

postsand extract post data to mysheet. I’ve used an integerito loop through cells in sheet. - Post title element has a class name of

entry-title, post date has a class name ofpublishedso we useFindElementByClassmethod to extract them. - Post URL is available in

hrefattribute ofatag so we useattribute("href")to get post URL. Post category has a class namedfirst-categoryso we use CSS selectorFindElementByCss(".first-category > a")which means find element withatag in element with class namedpost-meta. Read more about CSS selectors here : Getting started with CSS Selectors

Thats it, we’ve scraped simple data from a website using selenium VBA.

Scrape products from E-commerce website

Recently one of the readers of this blog has asked me how to scrape product data from an e-commerce website using selenium VBA. I’m sharing the same code now.

Goal is to extract products from this webpage https://expert.no/tv-og-tilbehor/tv/pl-1862/

Note : This example is purely for learning purpose and not intended for stealing data

Lets have a look at code once.

Public Sub expertscraper()

Dim bot As New WebDriver, myproducts As WebElements, myproduct As WebElement, i As Integer, productnum As String, clicknum As Integer, mysheet As Worksheet

bot.Start "chrome", "https://expert.no"

bot.Get "/tv-og-tilbehor/tv/pl-1862/"

bot.Window.Maximize

i = 2

Set mysheet = Sheets("example2")

Set myproducts = bot.FindElementsByClass("product-container")

For Each myproduct In myproducts

If myproduct.FindElementByClass("product-name").Text <> "" Then

mysheet.Cells(i, 1).Value = myproduct.FindElementByClass("product-name").Text

mysheet.Cells(i, 2).Value = Replace(myproduct.FindElementByClass("product-description-bullets").Text, vbLf, ",")

mysheet.Cells(i, 3).Value = myproduct.FindElementByClass("product-price").Text

i = i + 1

End If

Next

MsgBox ("complete")

End Sub

Code explanation

Above code is pretty much the same as in the previous example. Go to webpage, extract all products, loop through each product and extract data to sheet.

But there is a problem. When page loads first, only 36 products are loaded into page, more products are loaded when see more button in clicked at the end of the page. Every time see more button is clicked, 36 more products are added. Also total number of products is not same always. So we’ve to figure out a way to automate extracting all products without any manual intervention.

I used smart calculations there.

productnum = bot.FindElementById("product-list-count").Text 'find total number of products

productnum = Mid(productnum, 1, Application.WorksheetFunction.Search(" ", productnum) - 1)

clicknum = Round((CInt(productnum) - 36) / 36, 0) 'find number of times more products has to be clicked to get all products

For i = 1 To CInt(clicknum)

bot.FindElementByXPath("//*[@id='product-list-load-more']/div/button").Click

bot.Wait 1000

Next

Total count of products is displayed in the webpage when loaded first, So I extracted total count of products first, subtracted 36 from it and divided by 36 and rounded it to get total number of clicks to be made on see more button to get all products on the page. Then we go for normal loop to extract all the products to excel sheet.

Final code looks like this :

Public Sub expertscraper()

Dim bot As New WebDriver, myproducts As WebElements, myproduct As WebElement, i As Integer, productnum As String, clicknum As Integer, mysheet As Worksheet

bot.Start "chrome", "https://expert.no"

bot.Get "/tv-og-tilbehor/tv/pl-1862/"

bot.Window.Maximize

productnum = bot.FindElementById("product-list-count").Text 'find total number of products

productnum = Mid(productnum, 1, Application.WorksheetFunction.Search(" ", productnum) - 1)

clicknum = Round((CInt(productnum) - 36) / 36, 0) 'find number of times more products has to be clicked to get all products

For i = 1 To CInt(clicknum)

bot.FindElementByXPath("//*[@id='product-list-load-more']/div/button").Click

bot.Wait 1000

Next

bot.Wait 500

i = 2

Set mysheet = Sheets("example2")

Set myproducts = bot.FindElementsByClass("product-container")

For Each myproduct In myproducts

If myproduct.FindElementByClass("product-name").Text <> "" Then

mysheet.Cells(i, 1).Value = myproduct.FindElementByClass("product-name").Text

mysheet.Cells(i, 2).Value = Replace(myproduct.FindElementByClass("product-description-bullets").Text, vbLf, ",")

mysheet.Cells(i, 3).Value = myproduct.FindElementByClass("product-price").Text

i = i + 1

End If

Next

MsgBox ("complete")

End Sub

Best practices of scraping data in excel

Using Selenium is not the best method to scrap data always. It totally depends on the webpage and amount of data to be scraped. For instance, In the above example where we scrape products, Selenium is not the best method for that. That website pulls data from their Json API which is available at https://www.expert.no/Umbraco/Api/Product/ProductsByCategory

Simple HTTP POST requests to their API would be the best method to scrape that website. It hardly takes 5 seconds to scrape 240 products using API method whereas it takes about a minute to scrape the same using selenium VBA and also hangs a bit as excel is single threaded.

Code for API method :

Public Sub apimethod()

Dim xmlHttp As Object, api As String, Json As Object, response As Object, postdata As String, i As Integer, mysheet As Worksheet

myurl = "https://www.expert.no/Umbraco/Api/Product/ProductsByCategory"

postdata = "{'SiteId':2,'CampaignId':0,'CategoryId':1862,'StartIndex':0,'NumberToGet':239,'SortId':5,'IsLoadMore':true}"

Set xmlHttp = CreateObject("MSXML2.serverXMLHTTP")

xmlHttp.Open "POST", myurl, False

xmlHttp.setRequestHeader "Content-Type", "text/json"

xmlHttp.Send postdata

Set Json = JsonConverter.ParseJson(xmlHttp.responseText)

Set mysheet = Sheets("API Method")

i = 2

For Each product In Json("Products")

mysheet.Cells(i, 1).Value = product("Title")

mysheet.Cells(i, 2).Value = product("ShortDescription")

mysheet.Cells(i, 3).Value = product("ManufacturerName")

mysheet.Cells(i, 4).Value = product("Price")

i = i + 1

Next

End Sub

That website uses JSON API and excel doesn’t have built-in support for parsing JSON so I’ve used a JSON Parsing library which is available here – VBA-JSON

Also, In the first example where we scrape blog post data, simple HTTP GET requests can be made to blog homepage and scrape the HTML response.

Wrapping up

I’ll be explaining in-depth about when to use basic HTTP requests, when to use selenium VBA and when to use APIs and how to use them in another post. It requires some basic understanding about client side rendering, server-side rendering, APIs etc. Meanwhile download excel file with above examples here and play with selenium VBA.

Update : Read best practices of scraping here

Also, read Parse HTML in Excel VBA – Learn by parsing hacker news home page

If you have and questions or feedback, comment below.

- Ultimate Guide: Build A Mobile E-commerce App With React Native And Medusa.js - February 15, 2025

- Flutter lookup failed in @fields error (solved) - July 14, 2023

- Free open source alternative to Notion along with AI - July 13, 2023

Hi,

HTML code:

Select Part

1

2

3

4

5

6

7

8

9

10

Show Entries

Total : 461 nbsp; Processed : 461

The photo matching process for all the entries in this part is completed

how to scrape total andf processed values.

Thanks

Hi, I cannot tell without looking at the HTML code, But you can just use any selector for the elements with total and processed values. You can use Id, name,classname,tag or xpath as selector.

I’m really enjoying the theme design of your site.

Hi Ranjith,

How can i get values from textboxes from already opened webpage in chrome.

Could you please help me on how to do it using vba, i wanted textbox values to be copied to excel

Regards,

Sugat

Hi Sugat,

Unfortunately, Selenium cannot control an existing or already opened browser. You have to open the web page using selenium. In your case, next best option would be to develop a chrome extension which exports values from a web page to excel.

any idea if we can do it offline like using “MSXML2.ServerXMLHTTP”…. i am begginer of VBA but just lookign if any solution. Currently user are inputing values in textboxes in a webpage and same data they have to copy in a excel sheet. So any better way to achive it

Is the web page owned by you? If yes then you might be storing the data in a database which can be pulled from excel using XMLHTTP. I doubt if there is any other option. Do you have a link to the web page?

No webpage is not owned by me. my team work do some processing activity in that. So they are currently openign the site in chrome and follow certain process to navigate to particular page. So we need a code so that data should be copied from already opened webpage to excel

In that case, Building a custom browser extension according to that webpage is the only option available. Feel free to start a discussion on our forum with further details if you need any other help.

Could you pls explain how you figured out the items in the line postdata = “{‘SiteId’:2,’CampaignId’:0,’CategoryId’:1862,’StartIndex’:0,’NumberToGet’:239,’SortId’:5,’IsLoadMore’:true}” ?

By Inspecting XHR requests made by the webpage. Requests can b inspected using network tab chrome developer console. Read about client side rendering here – https://codingislove.com/best-practices-scraping-website-data/

Please suggest me the selenium web driver that supports Microsoft object library 16.0.

Hi Rajesh, The latest version of Selenium wrapper should work with Microsoft object library 16.0

What error are you getting?

Hi Ranjith,

Could you pls explain that how can we do switch window from browser page to download pop up and focus goes back to browser.. we tried many options but No luck.. can you pls..

Thanks in advance!..:)

Hi,

Did you mean the SaveAs dialog? There’s no way to control that using selenium. But you can use timeouts and keystrokes as a workaround.

Hi,

Could you please show an example of automating the Save As to save a file? Can you also specify a download folder or not?

Many thanks,

Q

Hi I am trying to get some help in MoveToElement. Since the elements are not visible and giving as IsDisplayed=”False”. I have successfully found the xPath but and it gives me IsPresent in Watch Window, but MoveToElement is not working as it is giving me syntax error. Would request you to help me with this.

Declaration

Dim element as WebElement, actions as Selenium actions

Code is

WebElement abc = element.FindElementByXpath(“//CorrectPath”)

actions.MoveToElement(abc)

Hi, Check the updated code. It has a moveToElement method.

Hi..

I am trying to open a secure webpage in IE explorer. I am getting runtime overflow error -6.

Dim IE As New IEDriver

IE.Start “iexplore”, “https://ccbcat1.in.telstra.com.au/ccopenui_enu/start.swe?SWECmd=Login”

IE.SetCapability CapabilityType.ACCEPT_SSL_CERTS, True

IE.Get “/”

Can you please help if this issue with IE?

Thanks

Thanks Ranjith for the all the great tips.

Just learning and trying scrapeCIL(). I note that this example doesn’t seem to work now that your website has changed.

In the example:

mysheet.Cells(i, 1).Value = post.FindElementByClass(“post-title”).Text

mysheet.Cells(i, 2).Value = post.FindElementByClass(“post-title”).FindElementByTag(“a”).Attribute(“href”)

I replaced “post-title” with “entry-title” and that works for href, but I can’t see how to pull the title down.

Trying:

mysheet.Cells(i, 2).Value = post.FindElementByClass(“entry-title”).FindElementByTag(“a”).Text

…but this doesn’t seem to work as there’s no id for the title.

It would be great if you’re able to show scrapeCIL() using the revised format of codingislove.com

Thanks

Having the same issue — can’t get this to work with the new class names.

Please update code.

Hi, Check the updated code now.

Hi, Check the updated code now.

Hello Sir,

I am struggling with the bellow error for a few days but could not find any solution.

Can you please have a look on my issue.

Error:

Run-time error “0”,

webRequestError

The underlying connection was closed: An unexpected error occurred on a receive.

I am using latest version of chrome , Firefox and Selenium VBA wrapper library .

Hi Atik, Try manually updating the chrome driver. Check the discussion here – https://codingislove.com/browser-automation-in-excel-selenium/#comment-519

Hi Sir,

I have a doubt using Selenium in VBA. I want to select from dropdown value-1. the http code is as below:

</option><option value="1">US</option><option value="6">JP</option>

<option value="7">CA</option>

<option value="4861">ENDLESS</option>

<option value="526970">BR</option>

<option value="771770">MX</option>

<option value="157860">MYHABIT</option>

<option value="926620">QUIDSI</option>

<option value="1071830">Panda02</option>

<option value="1259700">Panda04</option>

</select>

Sorry the correct http codes are as below:

<select id=”marketplaceId” name=”marketplaceId>

US

JP

CA

ENDLESS

BR

MX

MYHABIT

QUIDSI

Panda02

Panda04

Please help. I have many more questions following. :p

How is it in the examples given above that bot.Mouse.MoveTo bot.FindElementByClass(“pagination”) can move to the right place even though the actual class name is “navigation pagination” please?

There’s also another similar project one can try: https://github.com/daluu/seleniumexceladdin

FYI, there is also a VBScript alternative to Selenium VBA: http://htejera.users.sourceforge.net/vbswebdriver/

Hi Ranjith,

I am trying to run this program. The browser is opening but page is not loading and I am getting the application defined/ object defined:

Sub testsel()

Dim bot As New WebDriver

Dim rng As Range

Set rng = ThisWorkbook.Worksheets(“report”).Range(Worksheets(“Report”).Range(“D12”), Worksheets(“Report”).Range(“D12”).End(xlDown))

bot.Start “Firefox”, “https://mymetrix.comscore.com”

bot.Get “/app/”

bot.Window.Maximize

bot.FindElementByName(“unameTbx”).SendKeys (“amz_srajagopalan”)

bot.FindElementById(“__pwdTbx”).SendKeys (“nikh060911!”)

bot.Wait 0.1

‘bot.Quit

End Sub

could you please help me with this ?

Hi Ranjith!

Many thanks for your very clear tutorial.

Would it be possible to have the exact first same code (scrape a blog) but hiding the browser window ? It seems tricky because the code relies on the mouse moving so on visible elements…. but who knows, maybe it is possible…

I saw the PhantomJSdriver was included in Selenium Basic but not sure what to do with that…

Many thanks in advance and have a good day!

Kumar,

I am trying to use selenium/VBA to select a dropdown but I am facing this issue for all the field in the page that I am trying to reach. I have tried to do that, by name, id, xpath. All of them is giving run-time error 7 saying that the element could not be found. Find below the partial piece of the page code. I was able to control the login page but when it changes to the form page I cannot reach any field or drop down menus.

Direct Withdraw

Inter-company Stock Transport

Inter-Company(No Sales Order)

Inter-Company(With Sales Order)

Other

Outside Processing

Outside Processing (Media)

RTV Consigned (122K)

RTV Manual (Z71,Z73)

RTV Standard (122)

Sales Order

</select>

Thank you

Hi,

Thanks for the tutorial and example, I’ve really enjoyed them.

It looks like they’ve changed the website a little. I got it all to work except for the line to click the button:

bot.FindElementByXPath (“//*[@id=’product-list-load-more’]/div/button”).Click

I keep getting the error message “No such element error”. Any ideas about what I could do to address that?

(Apologies, I’m pretty new to this).

Thanks,

Hi,

I changed the code a little to:

bot.FindElementByClass(“btn-brand”).Click

It now finds the element, but cannot click it – it throws the error “ElementNot Visible EWrror”, element not interactable

Do you know how I could fix that?

Thanks,

In the first code, when I copy the code and paste into excel VBA then run it, error occurred “type mismatch” runtime error ’13’ at Set posts = bot.FindElementsByTag(“article”) . My windows version is 10 and office 2019, Selenium Library v2.0.9.0

Hi,

Please could you help on how to push the information from excel to web page by HTML elements using selenium VBA

Hi, I’ve written an article on that already – https://codingislove.com/browser-automation-in-excel-selenium

Hi, I ran the code “

PublicSubscrapeCIL()” in edge. Got NoSuchElementError on the line “mysheet.Cells(i, 1).Value = post.FindElementByClass(“entry-title”).Text”. How do i solve this?That means that element is not found. This was an old article. That website’s HTML elements might have changed now. You should modify the code to work with the latest website.

Thank a lot for such Ste-by-Step Examples. Very helpful for the beginners. It is far useful than VDO.

Your post on .Attribute(“href”) was most useful and working. I did not get the right syntax even after going through at least ten other websites. So all the credit goes to you. I have saved this url for future. Thanks once again.

Glad it helped 🙂